Achieving and maintaining GAMP® 5 compliance: IMA Active’s risk-based approach to software development and verification

1. Introduction

Founded in 1961 in Bologna (Italy), I.M.A. Industria Macchine Automatiche S.p.A. is the world leader in the design and production of automatic machines for the processing and packaging of pharmaceuticals, cosmetics, food, tea and coffee. The Group’s organisational structure is based on the production and commercial divisions that make up the business: IMA Pharma (processing and packaging in the pharmaceutical sector), IMA Dairy & Food (packaging in the food sector) and the Extra Pharma companies (packaging of tea, herbal infusions, coffee, drinks, cosmetics and toiletries). The IMA Active division, one of the Group’s three pharmaceutical brands, specialises in the design and manufacture of machines and systems for processing and manufacturing oral solid products. To bring to market products that meet Customers’ requirements, IMA Active must always keep in mind the requirements issued by the regulatory authorities, so that all critical equipment can be implemented in a way that meets validation requirements and thereby ensures patient safety, product quality and data integrity.

Among the reference standards and methods, the GAMP® (Good Automated Manufacturing Practice) guidelines aim to interpret the validation requirements and apply them to all aspects linked, directly or indirectly, to pharmaceutical product quality. In particular, the GAMP® 5 guide (“A Risk-Based Approach to Compliant GxP Computerized Systems”) introduces the concept of Risk Management for automated and computerised systems, with the aim of focusing validation and control effort where it is really necessary by identifying the functions and processes that pose the greatest risk to the pharmaceutical product. As a supplier of automatic machines to the pharmaceutical sector, IMA Active develops its equipment according to the GAMP® 5 principles and framework. With the growing level of automation, functions and processes previously handled mainly by mechanical devices are now carried out with the aid of software components. Software therefore takes on increasing importance, because product quality and process control must be guaranteed even where a computerised system takes the place of a manual operation.

In developing the latest machine models (Prexima and Mylab), particular attention was therefore paid to software design, and a multi-phase project including Risk Assessment and Control was devised; it is described in this article. The project involved a range of professionals, including members of the Quality Assurance Department, software engineers, validation and Risk Assessment experts, and co-workers from research laboratories and the University of Bologna, as shown in Figure 1.

Figure 1

2. Case Study: Prexima and Mylab

The project focused on the software of IMA Active’s two latest machine models: Prexima and Mylab. Prexima is an automatic rotary tablet press for producing single layer tablets; it has a gravimetric powder feeding system and allows all production volumes to be processed. Mylab is a multifunction system used to carry out granulation and/or core coating processes. It is a compact laboratory system, designed for R&D applications and for small product batches. For the software used in these machines to be classified as non-configured software belonging to Category 3, we as the supplier had to carry out the series of activities described below.

1. Study prior to Risk Assessment

Before carrying out the Risk Assessment, it was necessary to understand the context of application and to study in depth the software architecture of the two machine models covered by the project.

The software architectures of Prexima and Mylab are made up of two macro systems: Machine Control and User Interface, both designed and implemented in-house.

The Machine Control software processes all machine movements, the phases, the data coming from the sensors and the specific functions of the machine. In the Prexima it is installed on a PC, while in the Mylab it is installed on a PLC.

The User Interface (HMI) software, on the other hand, is the means of communication between the machine and the operator. It manages data flows to and from the Machine Control and displays them on the monitor; it also collects the data and statistics regarding product quality. It is installed on a PC.

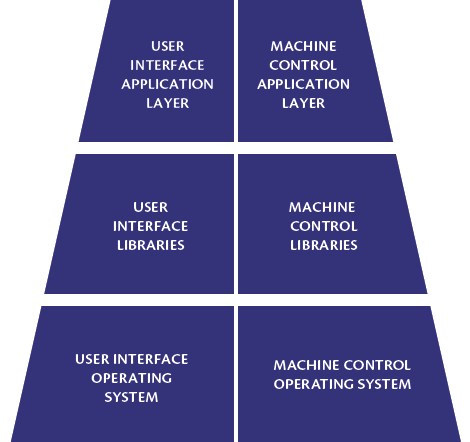

Figure 2 shows a schematic representation of the two macro systems. Both are made up of the following software layers: operating system (present only on Prexima, as on Mylab the Machine Control is installed on a PLC), libraries and application layer. The libraries implement the functions shared by all machine models. The application layer, on the other hand, is specific to the machine model and, through the libraries, implements the main functions for which Machine Control and User Interface are responsible.

The Risk Assessment covered the libraries and the application layer only. No further action was envisaged for the operating system, which was accepted by virtue of its successful widespread use.

Figure 2

2. Construction of Risk Assessment and Risk Control tables

The method used for the Risk Assessment and Risk Control is FMECA (Failure Mode, Effects and Criticality Analysis), one of the standard methodologies put forward by the ICH Q9 guideline.

Based on the steps provided by the FMECA, the critical issues of a process or product are analysed as follows:

- the various failure modes (hazards) are assessed, together with the severity of their effects on the system, the probability of their occurrence and whether or not controls are in place to detect them (Risk Identification/Risk Analysis);

- quantitative values are assigned to the severity, occurrence and detection ratings to calculate the Risk Priority of each failure mode analysed (Quality Risk Evaluation);

- depending on the Risk Priority Number, activities are identified and any corrective actions to be taken are established to make the risk level acceptable (Risk Control).

Since the Risk Assessment and Control in this project is applied to software, the hazards considered are not broken or damaged components but software behaviours (bugs) that the designer did not foresee or assess.

The goal of this phase was to define objective evaluation scales, so that the team carrying out the analysis would not assign values arbitrarily. From this phase onwards, collaboration between various professional figures was therefore essential: software designers, who have the required expertise on the software in the machine models; validation experts, who know the documentation required by the end customer; co-workers from research laboratories and the University of Bologna, who are up to date with the latest software design methodologies; and the Quality Assurance Department, for its expertise in the Risk Assessment methodology.

Given the different characteristics of the Machine Control and User Interface macro systems, it was necessary to make specific choices to define the evaluation scales and assign values to the three parameters:

- Severity: the impact of each hazard on patient health and/or on data (data corruption) was taken into account. In particular, the team focused on what the generic term “data corruption” means in the context of computerised systems (loss of data consistency and integrity).

- Probability: a preliminary study examined how the probability of software containing bugs can be estimated. It found that two main factors affect probability: maturity and complexity. The more mature the software, the lower the probability of bugs, because it has been widely used over time by numerous users. Complexity can depend on various factors, such as the type of programming language used and the foundations on which it is based. Different complexity evaluation scales from the literature were studied and then adapted to the automation context. In particular, for the Machine Control only cyclomatic complexity was considered, whereas for the User Interface several additional indicators specific to the object-oriented language used were necessary, such as Coupling Between Object Classes and Depth of Inheritance.

- Detection: the study of this parameter required examining all the means capable of providing evidence of a hazard or its underlying cause, from the point of view of both the software developer and the end user (for example, an error trace or an error message visible on the User Interface).

The scale chosen for all three of the parameters listed above has three levels: High, Medium and Low.
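As an illustration of how a Probability rating could be derived from complexity and maturity, the sketch below counts decision points to approximate cyclomatic complexity and then combines the result with a maturity rating. It is a minimal sketch under stated assumptions: Python is used only for illustration (the article does not state the language of the machine software), and the thresholds and the combination rule are hypothetical, not the scales actually defined in the project.

```python
import ast

# Node types that each add one decision point (simplified McCabe count).
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity as 1 + number of decision points."""
    decisions = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra paths
            decisions += len(node.values) - 1
    return 1 + decisions


def complexity_level(cc: int, medium_from: int = 11, high_from: int = 21) -> str:
    """Map a complexity value to Low/Medium/High (thresholds are assumptions)."""
    if cc >= high_from:
        return "High"
    if cc >= medium_from:
        return "Medium"
    return "Low"


# Hypothetical scoring: complex code scores higher, mature code scores lower.
_COMPLEXITY_SCORE = {"Low": 0, "Medium": 1, "High": 2}
_MATURITY_SCORE = {"High": 0, "Medium": 1, "Low": 2}


def probability_level(complexity: str, maturity: str) -> str:
    """Combine complexity and maturity into a Probability rating (assumed rule)."""
    score = _COMPLEXITY_SCORE[complexity] + _MATURITY_SCORE[maturity]
    if score >= 3:
        return "High"
    if score == 2:
        return "Medium"
    return "Low"
```

With a rule of this kind, a simple and long-used library module would rate Low, while a complex module written from scratch for a new machine model would rate High.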

3. Execution of the Risk Assessment and calculating the Risk Priority

The software consists of modules that implement various functions; each module was analysed individually. For each module, all the possible hazards were listed along with the effects they could have on the product, on the integrity of the data and on the patient’s health. In particular, the main hazards encountered for the Machine Control had an impact on patient health, whereas for the User Interface risks relating to data integrity and consistency were more common.

Based on the evaluation scales, values of severity, probability of occurrence and detection were assigned to each hazard-effect pair, and the Risk Priority was calculated as suggested by the tables in chapter 5.4 of GAMP® 5. Depending on the Risk Priority that emerged for each pair, the corresponding actions were taken.
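The calculation can be sketched as a two-step table lookup: severity and probability give a Risk Class, and Risk Class and detection give the Risk Priority. The cell values below follow the commonly reproduced form of the GAMP® 5 matrices and are an assumption here; they should be checked against the guide and against the tables actually used in the project, which are not reproduced in this article. The function and variable names are hypothetical.

```python
# Step 1: (severity, probability) -> Risk Class (1 = highest risk).
# Cell values are assumed from the commonly cited GAMP 5 matrix.
RISK_CLASS = {
    ("High", "Low"): 2, ("High", "Medium"): 1, ("High", "High"): 1,
    ("Medium", "Low"): 3, ("Medium", "Medium"): 2, ("Medium", "High"): 1,
    ("Low", "Low"): 3, ("Low", "Medium"): 3, ("Low", "High"): 2,
}

# Step 2: (risk class, detection) -> Risk Priority.
# High detection means the failure is likely to be noticed, lowering priority.
RISK_PRIORITY = {
    (1, "High"): "Medium", (1, "Medium"): "High", (1, "Low"): "High",
    (2, "High"): "Low", (2, "Medium"): "Medium", (2, "Low"): "High",
    (3, "High"): "Low", (3, "Medium"): "Low", (3, "Low"): "Medium",
}


def risk_priority(severity: str, probability: str, detection: str) -> str:
    """Return the Risk Priority of one hazard-effect pair."""
    risk_class = RISK_CLASS[(severity, probability)]
    return RISK_PRIORITY[(risk_class, detection)]


if __name__ == "__main__":
    # Hypothetical hazard-effect pair: severe effect, probable, hard to detect.
    print(risk_priority("High", "High", "Low"))
```

Keeping the two tables as data rather than as nested conditions makes it straightforward to review them against the reference matrices and to record them together with the analysis.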

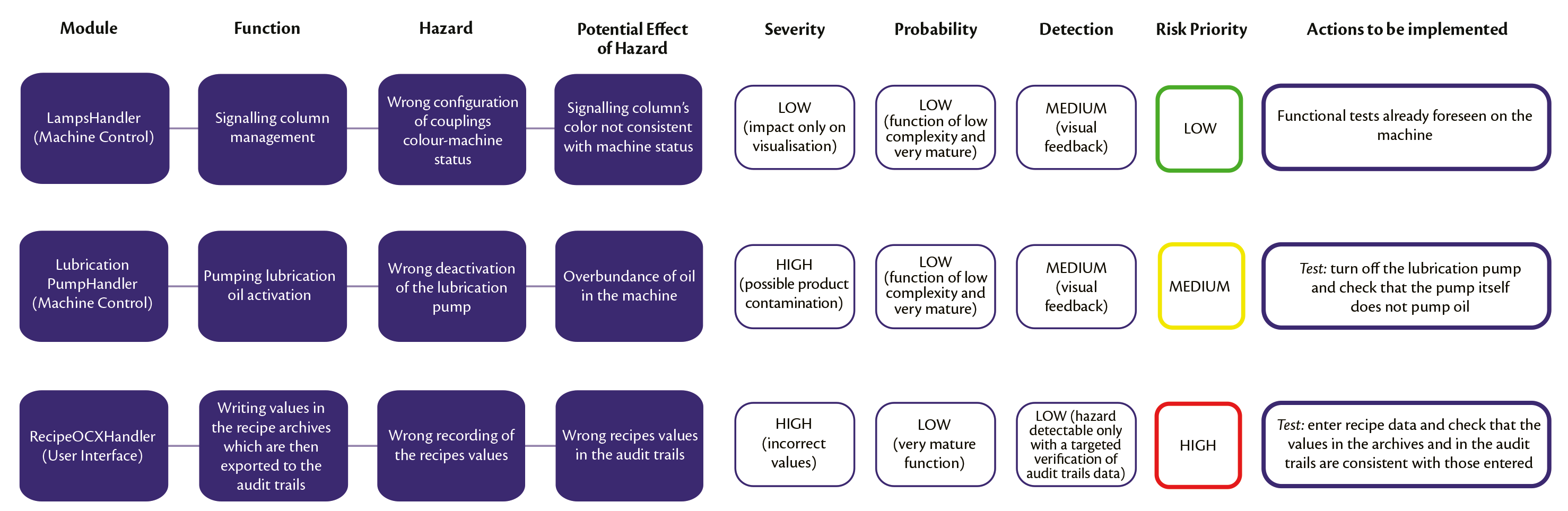

Figure 3 shows an example of the Analysis carried out on three different modules (two belonging to the Machine Control and one to the User Interface) with resulting different Risk Priorities (for the sake of simplicity, a single example of a hazard is shown for each module).

Figure 3

4. Identifying the actions to be undertaken according to the Risk Priority

Depending on the Risk Priority level, a series of activities to be carried out on the analysed modules was identified. For low Risk Priority modules, no additional action was taken beyond the tests normally performed during internal testing on the machine (e.g. checking that reports are generated correctly for the machine type, verifying the presence and correctness of the production charts). Modules with a medium Risk Priority were evaluated on a case-by-case basis, with corrective actions where appropriate, such as a code review to eliminate macro errors potentially present in the code, or targeted tests. For high Risk Priority modules, activities aimed at lowering the Risk Priority were carried out.

In particular, for these modules an assessment was made of whether to carry out dedicated tests or to intervene directly on the software, for example to lower the probability of a hazard occurring or to increase detection by adding specific controls.

For the dedicated tests, the goal was to reproduce the system status by simulating the hazard presumed in the Risk Assessment and to check the response, consequently correcting any errors found in the software. All tests were fully documented and the results obtained were noted.
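A dedicated test of this kind can be sketched as follows: each hazard assumed in the Risk Assessment is injected as an input, the response is compared with the expected one, and the outcome is stored as a record. Everything here is hypothetical and for illustration only: the `evaluate_weight` function, its parameters, the status names and the numeric values are assumptions, not part of the actual machine software.

```python
import math
from dataclasses import dataclass


def evaluate_weight(reading_mg: float, target_mg: float, tolerance_mg: float) -> str:
    """Hypothetical control function: classify one tablet weight reading."""
    if math.isnan(reading_mg) or math.isinf(reading_mg) or reading_mg <= 0:
        return "ALARM"    # implausible sensor value: never accept silently
    if abs(reading_mg - target_mg) > tolerance_mg:
        return "REJECT"   # plausible value, but out of specification
    return "ACCEPT"


@dataclass
class HazardTestRecord:
    """One documented test: the simulated hazard and the observed response."""
    hazard: str
    injected: float
    expected: str
    actual: str

    @property
    def passed(self) -> bool:
        return self.expected == self.actual


def run_hazard_tests() -> list:
    """Inject each assumed hazard and record the system response."""
    cases = [
        ("sensor returns NaN", float("nan"), "ALARM"),
        ("sensor returns negative weight", -5.0, "ALARM"),
        ("weight outside tolerance", 530.0, "REJECT"),
    ]
    records = []
    for hazard, injected, expected in cases:
        actual = evaluate_weight(injected, target_mg=500.0, tolerance_mg=25.0)
        records.append(HazardTestRecord(hazard, injected, expected, actual))
    return records


if __name__ == "__main__":
    for record in run_hazard_tests():
        print(record.hazard, "->", record.actual, "PASS" if record.passed else "FAIL")
```

Storing the injected value, the expected response and the actual response for every case gives the kind of documented evidence described above, and a failed record points directly at the behaviour to correct.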

If a particularly large module was found to be high risk and therefore involved a very long series of activities, the decision was taken to separate it during the analysis phase into its underlying functions, so as to isolate the most critical ones and focus activities solely on them. Choosing the granularity (module or function) with which software was separated did not follow a fixed rule; the developer in the analysis team decided based on context and type of software.

5. Maintenance of analysis tables following software updates and/or changes

By its nature, software evolves continually: to improve it, to follow mechanical and electrical modifications, and to add new machine functions. The Risk Assessment and Control must therefore evolve too, and be updated at the same pace as the software itself. For every software version issued after the first one analysed, the Risk Assessment and Control table must also be updated, reviewing the modules that have been modified or added and those affected by the modifications or additions. In this way, each existing software version has its own Risk Assessment and Control table.
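One way to identify the modules to be reviewed for a new version is to start from the modified or added modules and follow the dependencies between modules. The sketch below is a minimal illustration under stated assumptions: the dependency map and the module names are hypothetical, and treating every direct or indirect dependent as affected is a conservative assumption, not a rule stated in the project.

```python
from collections import deque


def modules_to_review(changed: set, depends_on: dict) -> set:
    """Return the changed modules plus every module that depends on them.

    changed:    modules modified or added in the new software version
    depends_on: module name -> set of modules it uses (hypothetical map)
    """
    # Invert the map: for each module, which modules use it?
    dependents = {}
    for module, used in depends_on.items():
        for dependency in used:
            dependents.setdefault(dependency, set()).add(module)

    # Breadth-first walk from the changed modules through their dependents.
    to_review = set(changed)
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent not in to_review:
                to_review.add(dependent)
                queue.append(dependent)
    return to_review


if __name__ == "__main__":
    # Hypothetical module names, for illustration only.
    depends_on = {
        "hmi_reports": {"lib_stats"},
        "lib_stats": {"lib_io"},
        "press_control": {"lib_io"},
        "recipes": set(),
    }
    print(sorted(modules_to_review({"lib_stats"}, depends_on)))
```

The resulting set is only a starting point for the review; the analysis team still decides which of the listed modules are actually affected by the change.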

3. Conclusions

The project described here had a variety of benefits.

Firstly, resources were optimised: following the Risk Assessment, resources were able to focus on the most at-risk modules and functions, directing corrective actions solely where necessary. In particular, of all the modules analyzed, approximately 20% were high risk (almost entirely application layer modules developed from scratch for new machine models) and approximately 30% were medium risk (80% of which were application layer modules and the remaining 20% library modules shared by all machines). Consequently, the greatest efforts were focused on approximately 50% of the software, instead of the software in its entirety.

Another benefit of the activity was an improvement in software quality. Following the Risk Assessment results, the corrective actions implemented also included software changes to eliminate errors, add further controls (thus increasing hazard detection) or simplify software functionality (reducing its complexity).

Furthermore, by using the aforementioned methodology, the analysis team was directed to evaluate hazards not only from the point of view of software functionality, but above all from that of product quality and data integrity and consistency. In addition, the Risk Priority resulting from the Assessment is objective, thanks to the method of constructing the evaluation scales of the Severity, Probability and Detection parameters.

Because the Risk Assessment and Control tables are always aligned with the software revisions, they are also a key documentation tool. They record all the scenarios considered for the various hazards and the reasoning behind them, so that software changes can be implemented more quickly and accurately, even by a designer other than the one who wrote the original software.

References

[1] ICH Q9 – Quality Risk Management, International Conference on Harmonisation, Geneva, Switzerland, 2005, www.ich.org

[2] GAMP® 5: A Risk-Based Approach to Compliant GxP Computerized Systems, International Society for Pharmaceutical Engineering (ISPE), 2008, www.ispe.org/gamp-5
